Technical Program

December 19th afternoon: Registration

December 20-21th full day: Invited talks

December 22th morning: Invited talks

Banquet will be arranged on December 20th night for all full registered members.

|

|

Invited talks

Imputation of Time Series with Missing Values under Heavy-Tailed AR Model via Stochastic EM

|

Daniel P.Palomar |

Many applications in big data deal with time series that have missing values due to multiple reasons (e.g., data record failure, unexpected data loss, outliers). Unfortunately, most algorithms require complete data and can only be applied after either discarding the missing values or interpolating somehow (e.g., with splines or polynomials). These are heuristic methods to deal with missing values and, in fact, destroy the statistics of the original time series. In addition, in many big data applications the observations do not follow the Gaussian distribution but instead have heavy tails, which only makes the analysis more difficult. We will consider an autoregressive (AR) model for the time series and will present an imputation method that preserves the statistics of the original time series. The first part consists of estimating the parameters of the original heavy-tailed distribution with missing values for which we resort to stochastic EM. The second part consists of imputing the missing values so that the original statistics are preserved. We will present numerical simulations in the area of financial data.

Slides

Online Resource Allocation with Applications to Revenue Management

|

David Simchi-Levi |

Online resource allocation is a fundamental problem in OR and CS with applications such as offering products to customers, distributing jobs to candidates, assigning advertisers to ad slots, and matching drivers to passengers. These problems can be abstracted as follows: there are fixed resources, each of which can be sold at multiple known prices. These resources must be allocated on-the-fly, without assuming anything about future demand. In this talk we cover the CS and OR literature on the problem and in particular focus on two techniques: exploration and exploitation methods, as well as competitive analysis.

In the latter case, we review new algorithms that achieve tight competitive ratios under the integral or asymptotic settings. Our algorithms are simple, intuitive and robust and our competitive ratios are provably optimal, for every possible set of prices.

In the former case, we discuss an efficient and effective dynamic pricing algorithm, which builds upon the Thompson sampling algorithm used for multi-armed bandit problems by incorporating inventory constraints into the pricing decisions. The algorithm proves to have both strong theoretical performance guarantees as well as promising numerical performance results when compared to other algorithms developed for the same setting.

Finally, we compare the performance of both techniques, exploration and exploitation methods and competitive analysis, with real-world and synthetic data from various retail applications.

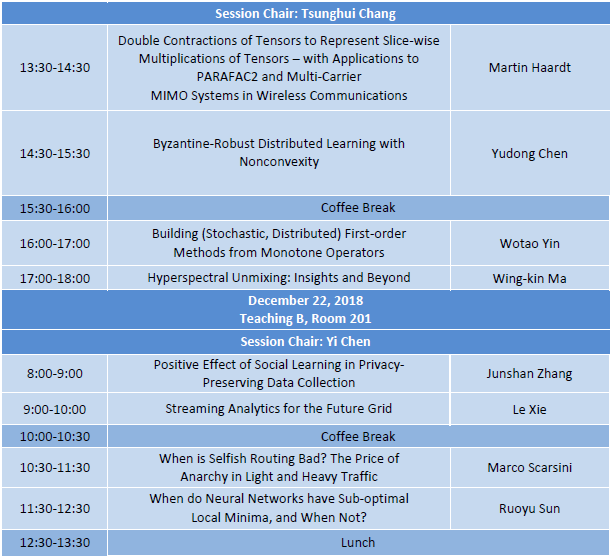

Positive Effect of Social Learning in Privacy-Preserving Data Collection

|

Junshan Zhang |

We explore a model where users share (noisy version of) their personal data with social friends. Based on both her personal data and the noisy data from her friends, each user makes strategic decisions to report privacy-preserving data. We develop a Bayesian game theoretic framework to study the impact of social learning on users’ data reporting strategies and devise the payment mechanism for the data collector. Our findings reveal that both the data collector and the users can benefit from social learning under some conditions.

When is Selfish Routing Bad? The Price of Anarchy in Light and Heavy Traffic

|

Marco Scarsini |

This paper examines the behavior of the price of anarchy as a function of the traffic inflow in nonatomic congestion games with multiple origin-destination (O/D) pairs. Empirical studies in real-world networks show that the price of anarchy is close to 1 in both light and heavy traffic, thus raising the question: can these observations be justified theoretically? We first show that this is not always the case: the price of anarchy may remain a positive distance away from 1 for all values of the traffic inflow, even in simple three-link networks with a single O/D pair and smooth, convex costs. On the other hand, for a large class of cost functions (including all polynomials), the price of anarchy does converge to 1 in both heavy and light traffic, irrespective of the network topology and the number of O/D pairs in the network. We also examine the rate of convergence of the price of anarchy, and we show that it follows a power law whose degree can be computed explicitly when the network’s cost functions are polynomials.

Slides

Low-Resolution Quantization in Wireless Communications

|

A.Lee Swindlehurst |

The use of one-bit analog-to-digital converters (ADCs) has been proposed to reduce the otherwise overwhelming cost and energy consumption associated with communication systems employing large number of antennas (i.e., “massive MIMO”), especially those that operate with very large bandwidths and thus very high sampling rates. This talk focuses on the impact (good and bad) associated with very coarse quantization in such systems. Beyond the standard one-bit ADC, we also explore the possibility of using a spatial-analog of the well-known one-bit Sigma-Delta ADC for temporally oversampled systems. This so-called “Spatial Sigma Delta” implementation shapes the spatial spectrum of the quantization noise, reducing its impact at lower spatial frequencies (e.g., near broadside for a uniform linear array). This can provide a significant benefit when an oversampled array is employed, or when the signals from the users of interest are known to be located away from the end-fire of the array. We study the performance of such systems under realistic operating characteristics and suggest how they might be deployed in cellular settings.

Slides

Learning to Benchmark

|

Alfred Hero |

We propose a framework for learning the intrinsic difficulty of classifying a labeled training sample, based on empirical estimation of the minimal achievable classification error, i.e., the Bayes error rate. We call this meta-learning problem “learning to benchmark” with the objective of finding low complexity and statistically consistent estimates of the Bayes mis-classification error rate that bypasses the problem of approximating the Bayes-optimal classi fier. The Bayes classification error probability can be represented as an information divergence measure and an ensemble of geometric learners will be shown to solve the learning to benchmark problem with optimal (linear) rates of implementation complexity and mean squared error convergence. This is joint work with Li Xu and Morteza Noshad.

Slides

Online Learning for Algorithmic Bidding

|

Lang Tong |

We consider the problem of algorithmic bidding in repeated auctions of goods with random clearing prices and payoffs. This problem arises naturally in electricity markets where market participants arbitrage electricity across time or locations. Online advertising is another example where advertisers bid to buy ad spaces online, not knowing the payoffs of such ads buys. In both cases, the price and the value of the goods can be modeled as random variables with unknown joint distributions. A bidder needs to choose what goods to bid and their bidding prices subject to budget constraints. A bidder can be risk neutral or risk averse.

In this talk, we present an online learning approach to algorithmic bidding in repeated auctions. Using virtual trading in a two-settlement market as an example, we develop an online learning algorithm that maximizes the cumulative return from the bidding strategy under both risk-neutral and risk-averse performance measures. It is shown that the expected payoff of the proposed algorithm converges, with an almost optimal convergence rate, to the expected payoff of the global optimal policy corresponding to the case when the underlying price distribution is known. We demonstrate that the proposed strategy outperforms existing machine learning benchmarks and achieves significant profit consistently based on historical data from the NYISO and PJM markets over the eleven-year period between 2006 and 2016.

This is a joint work with Sevi Baltaoglu and Qing Zhao.

Random Initialization and Implicit Regularization in Nonconvex Statistical Estimation

|

Yuxin Chen |

Recent years have seen a flurry of activities in designing provably efficient nonconvex procedures for solving statistical estimation / learning problems. Due to the highly nonconvex nature of the empirical loss, state-of-the-art procedures often require suitable initialization and proper regularization (e.g. trimming, regularized cost, projection) in order to guarantee fast convergence. For vanilla procedures such as gradient descent, however, prior theory is often either far from optimal or completely lacks theoretical guarantees.

This talk is concerned with a striking phenomenon arising in two nonconvex problems (i.e. phase retrieval and matrix completion): even in the absence of careful initialization, proper saddle escaping, and/or explicit regularization, gradient descent converges to the optimal solution within a logarithmic number of iterations, thus achieving near-optimal statistical and computational guarantees at once. All of this is achieved by exploiting the statistical models in analyzing optimization algorithms, via a leave-one-out approach that enables the decoupling of certain statistical dependency between the gradient descent iterates and the data. As a byproduct, for noisy matrix completion, we demonstrate that gradient descent achieves near-optimal entrywise error control.

This is joint work with Cong Ma, Kaizheng Wang, Yuejie Chi, and Jianqing Fan.

Byzantine-Robust Distributed Learning with Nonconvexity

|

Yudong Chen |

In large-scale distributed learning, security issues have become increasingly important. Particularly in a decentralized environment, some computing units may behave abnormally, or even exhibit Byzantine failures–arbitrary and potentially adversarial behavior. In this talk, we develop ByzantinePGD, a distributed learning algorithm that is provably robust against such failures. At the core of ByzantinePGD is an efficient procedure for optimizing a non-convex loss function given only corrupted information of the gradient. We show that our algorithm converges to a local optimum of the population loss, escaping all saddle points. Under statistical settings of the data, we further show that our algorithm achieves near-optimal statistical error rates in both low and high dimensional regimes.

Streaming Analytics for the Future Grid

|

Le Xie |

How to conduct real-time analytics of streaming measurement data in the power grid? This talk offers a dynamic systems approach to utilizing data of different time scale for improved monitoring of the grid cyber and physical security. The first example of the talk presents how to leverage synchrophasor data dimensionality reduction and Robust Principal Component Analysis for early anomaly detection, visualization, and localization. The second example presents an online framework to detect cyber attacks on automatic generation. A cyber attack detection algorithm is designed based on the approach of Dynamic Watermarking. The detection algorithm provides a theoretical guarantee of detection of cyber attacks launched by sophisticated attackers possessing extensive knowledge of the physical and statistical models of targeted power systems. The underlying theme of the work suggests the importance of integrating data with dynamic context-aware models in the smart grid.

Double Contractions of Tensors to Represent Slice-wise Multiplications of Tensors – with Applications to PARAFAC2 and Multi-Carrier MIMO Systems in Wireless Communications

|

Martin Haardt |

The slice-wise multiplication of two tensors is required in a variety of tensor decompositions (such as PARAFAC2 and PARATUCK2) and is encountered in many big data applications, including the analysis of multidimensional biomedical data (EEG, MEG, etc.) or multi-carrier MIMO systems. In this talk, we propose a new tensor representation that is not based on a slice-wise (matrix) description, but can be represented by a double contraction of two tensors. Such a double contraction of two tensors can be efficiently calculated via generalized unfoldings. It leads to new explicit tensor models of the investigated system that do not depend on the chosen unfolding and reveal the tensor structure of the data model (such that all possible unfoldings can be seen at the same time). As an example, we express the PARAFAC2 decomposition in terms of this new explicit tensor description (Tucker model) utilizing the double contraction operator. Moreover, we show that this explicit tensor description opens several efficient ways to compute the PARAFAC2 decomposition.

Furthermore, we apply this new concept to the design of new receivers for multi-carrier MIMO systems in wireless communications. In particular, we consider MIMO OFDM systems with and without Khatri-Rao coding. The proposed receivers exploit the channel correlation between adjacent subcarriers, require the same amount of training symbols as traditional OFDM techniques, but have an improved performance in terms of the symbol error rate. The tensor structure can also be exploited via more iterations to decrease the symbol error rate even further. We also show how the training overhead can be reduced dramatically for operations in the millimeter wave band by exploiting the sparsity of the multidimensional channel tensor explicitly.

Slides

Stein's Method for Big-Data Systems: From Learning Queues to Q-Learning

|

Lei Ying |

Big-data analytics involves data collection, data processing, and mining of and learning from data. Stochasticity is ubiquitous in big-data analytics, and often one of the main obstacles in the design, control and analysis of big-data systems. In the first part of this talk, I will review some open problems in private-data market design, cloud computing and reinforcement learning related to stochastic analysis. I will then introduce an analytical framework, inspired by Stein’s method in probability theory, for analyzing stochastic big-data systems. I will present two previously open problems which we solved recently: (i) how much information is needed to balance the load in cloud computing systems to achieve asymptotically zero queueing delay? (ii) how many samples are needed in reinforcement learning to learn value functions or Q-functions with function approximation?

Integrative Data Science Methods to Elucidate Pharmacologic Response Mechanisms

|

Brian D. Athey |

New insights into the architecture and dynamics of the non-coding regulatory genome have transformed our understanding of the cornerstones of classical pharmacogenomics–pharmacokinetics (PK) and pharmacodynamics (PD). The newly emerging concept of the “pharmacoepigenome”, broadly enabled by the 4D Nucleome Concept, involves regulators of gene expression (termed the “Regulome”), including enhancers, promoters and RNAs located in the noncoding genome, and is characterized by a hierarchy of stereotypic transcriptional domains in which variation profoundly impacts drug response in humans. Transcriptional control consists of canonical 3D structures that include topologically associated domains (TADs). Different TADs are activated or suppressed by drugs in a cell-type specific manner. Drug-disease networks have been found to be tightly coupled so that gene variants significantly associated with a disease are identical to, or are found within, the same regulatory networks that determine medication-based therapeutic outcome. Thus, mutations that disrupt the spatial hierarchy of transcription within euchromatin not only convey disease risk but also concomitant variability in drug and pharmacogenomic response. Pathways containing disease risk, drug response and concomitant adverse event variants has the potential to better inform therapeutic options for patients based on the emerging pharmacological basis of drug response and adverse drug events. Examples of current and future applications of Machine Learning in pharmacogenomics, include 1) identification of novel regulatory variants located in non-coding domains of the genome and their function as applied to pharmacoepigenomics; 2) patient stratification from medical records; and 3) the mechanistic prediction of drug response, targets and their interactions. In addition, we anticipate that in the future, deep learning will be widely used to predict personalized drug response and optimize medication selection and dosing, using knowledge extracted from large and complex molecular, epidemiological, clinical and demographic datasets. Mathematical, statistical, algorithmic, and infrastructural issues with this kind of integrative biomedical data science analysis will be explored to provide for a robust discussion in the conference.

Building (Stochastic, Distributed) First-order Methods from Monotone Operators

|

Wotao Yin |

In this talk, we use monotone operator theory to derive and analyze a wide range of classical and modern convex optimization algorithms, including stochastic (randomized), parallel, distributed, and decentralized methods that are well-suited for large-scale and big data problems. The list covers algorithms includes proximal-gradient, (proximal) method of multipliers, alternating minimization, PDHG, Chambolle Pock, (standard, proximal, and linearized) ADMM, and PD3O. Finite-sum and block-coordinate-friendly properties are used to develop parallel and asynchronous methods. These methods are presented in a unified and streamlined manner using only a few mathematical concepts from monotone operator theory.

Talk Co-author: Ernest K. Ryu.

Finding and Removing Human Biases from AI

|

James Zou |

Visualization and exploration of high-dimensional data is a ubiquitous challenge in data science. Widely-used techniques such as principal component analysis (PCA) and autoencoder aim to identify dominant trends in one dataset. However, in many settings we have datasets collected in different conditions, e.g. a treatment and a control experiment, and we are interested in visualizing and exploring patterns that are specific to one dataset and not shared with other data. We propose a new framework of contrastive learning, which identifies low-dimensional structures that are enriched in a dataset relative to comparison data. In a wide variety of experiments, we demonstrate that contrastive learning with a background dataset enables us to visualize dataset-specific patterns missed by PCA and other standard methods. We further provide a geometric interpretation of contrastive learning and strong mathematical guarantees.

When do Neural Networks have Sub-optimal Local Minima, and When not?

|

Ruoyu Sun |

To explain the recent success of neural networks, researchers have conjectured that all local minima are global minima despite the non-convexity of the problem. Is this really true? Is this just hand-wavy intuition that is roughly true in special cases or can be a rigorous result in a broad setting?

In this talk, instead of explaining “why neural-nets are good”, we try to understand “when neural-nets are good, and when not” –with a restricted definition of “good” by “every local-min is global-min”. We focus on the binary classification problem and discuss how architecture and data affect the landscape. On the positive side, we prove that no bad local minima exist under reasonable assumptions on the neuron types, the neural-net structure, the loss function, and the dataset. On the negative side, we provide dozens of counterexamples that show the necessity of most assumptions.

Our approach can be viewed as a game of “local-min attack” and “defense”. An attacker tries to construct examples that bad local minima exist, and the defender modifies the setting to eliminate bad local minima. For instance, the attacker constructs bad local minima for 1-hidden-layer ReLU network with linearly separable data, then the defender proves that smooth versions of ReLU eliminate them. At last, we present a strong defense consisting of a special neuron and a special regularizer that can eliminate bad local minima for a deep neural-net in the realizable case.

Joint work with Shiyu Liang, Yixuan Li, Jason Lee and R. Srikant.

Hyperspectral Unmixing: Insights and Beyond

|

Wing-Kin Ma |

Hyperspectral unmixing (HU) is one of the most prominent research topics in hyperspectral imaging in remote sensing. HU aims at identifying the underlying materials and their corresponding compositions in the scene, using the high spectral degrees of freedom of hyperspectral images. Early HU research is based on smart intuitions from remote sensing, and recent involvements from other fields—such as signal processing, optimization and machine learning—have substantially enriched HU techniques. This talk is a semi-tutorial of HU. We will look into what are the key insights of HU from a signal processing perspective, how such insights lead to a unique branch of theory and methods for structured matrix factorization, and why HU has strong connections to problems from other areas such as machine learning, data analytics, blind source separation, computer vision and biomedical imaging. If time permits, we will also have a quick tour on hyperspectral super-resolution, an emerging and fundamentally intriguing topic in which HU also plays a role.

Slides

Transforming Retailing Experiences with Artificial Intelligence

|

Bowen Zhou |

Artificial Intelligence (AI) is making big impacts in our daily life. In this talk, we will show how AI is transforming retail industry. In particular, we propose the brand-new concept of Retail as a Service (RaaS), where retail is redefined as the natural combination of content and interaction. With the capability of knowing more about consumers, products and retail scenarios integrating online and offline, AI is providing more personalized and comprehensive multimodal content and enabling more natural interactions between consumers and services, through the innovative technologies we invented at JD.com. We will show 1) how computer vision techniques can better understand consumers, help consumers easily discover products, and support multimodal content generation, 2) how the natural language processing techniques can be used to support intelligent customer services through emotion computing, 3) how AI is building the very fundamental technology infrastructure for RaaS.