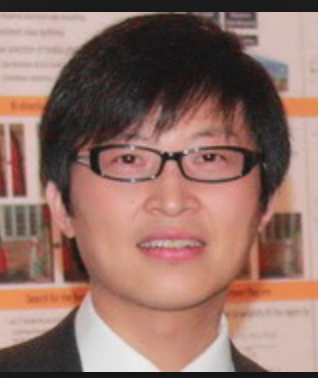

Technical Program

December 14th afternoon: Tutorial

December 15-16th full day: Invited talks

December 17th morning: Invited talks

Banquet will be arranged on December 15th night for all full registered members.

|

|

Tutorial

Sequential Decision Making under Uncertainty

|

Mykel Kochenderfer |

Many important problems involve decision making under uncertainty, including aircraft collision avoidance, wildfire management, and disaster response. When designing automated decision support systems, it is important to account for the various sources of uncertainty when making or recommending decisions. Accounting for these sources of uncertainty and carefully balancing the multiple objectives of the system can be very challenging. One way to model such problems is as a partially observable Markov decision process (POMDP). Recent advances in algorithms, memory capacity, and processing power, have allowed us to solve POMDPs for real-world problems. This tutorial will discuss models for sequential decision making and algorithms for solving them. In addition, this tutorial will highlight applications to decision making for drones and automated vehicles.

Invited talks

Object Oriented Convex Optimization with CVXPY

|

Stephen P. Boyd |

CVXPY is a widely used modeling framework in Python for convex optimization. CVXPY has become the foundation of a broader ecosystem of optimization software, including packages for distributed optimization, nonconvex optimization, and optimization in application areas such as finance, energy, and radiation treatment planning. These packages extend CVXPY and use convex optimization as a subroutine. In this talk we explain the basics of CVXPY and highlight several interesting CVXPY extensions.

Primal-dual splitting methods for convex optimization

|

Lieven Vandenberghe |

We discuss the Douglas-Rachford operator splitting method viewed as a primal-dual algorithm for large-scale convex optimization. The talk will start with applications to image deblurring problems that illustrate the versatility of the Douglas-Rachford method for primal-dual decomposition. The main part of the talk will be concerned with the important primal-dual hybrid gradient (PDHG) method, proposed by Esser, Zhang, and Chan, and by Pock, Cremers, Bischof, and Chambolle. This method is widely used in image processing, computer vision, and machine learning. It is known to include as a special case the Douglas-Rachford splitting algorithm for minimizing the sum of two convex functions. We will show that, conversely, the PDHG algorithm can be derived from the Douglas-Rachford splitting algorithm. We will also comment on the implications of this result for the convergence analysis of the PDHG algorithm and its extensions. (Joint work with Daniel O'Connor.)

Sampling Large Networks: Algorithms and Applications

|

John C.S. Lui |

Often times, large networks can be represented as graphs. For example, the Internet topology can be represented as an undirected graph while large logical networks (e.g., Facebook, Twitter,..etc) can be represented as either directed or undirected graphs. For these graphs, characterizing node pair relationships is important for applications such as friend recommendation and interest targeting in online social networks (OSNs). Due to the large scale nature of such networks, it is infeasible to enumerate all user pairs and so sampling is used. In this talk, we show that it is a great challenge even for OSN service providers to characterize user pair relationships when they possess the complete graph topology. The reason is that when sampling techniques (i.e., uniform vertex sampling and random walk) are naively applied, they can introduce large biases, in particular, for estimating similarity distribution of user pairs with constraints such as existence of mutual neighbors. Estimating statistics of user pairs is even more challenging in the absence of the complete topology information, since an unbiased sampling technique such as uniform vertex sampling is usually not allowed, and exploring the OSN graph topology is expensive. To address these challenges, we present asymptotically unbiased sampling methods to characterize user pair properties. We also show potential applications and discuss future research work.

Large gaps between cyclic and randomized orders in large-scale optimization

|

Ruoyu Sun |

A simple yet powerful idea for solving large-scale computational problems is to iteratively solve smaller subproblems. The applications of this idea include coordinate descent (CD), POCS (Projection onto Convex Sets) and ADMM. Most existing results are based on the sampling-with-replacement update order. We analyze the classical Gauss-Seidel order and the popular random permutation order (sampling-without-replacement). First, we show that the cyclic versions of G-SCDPOCS methods can be O(n^2) times slower than their randomized counterparts in the worst case. We also discuss several worst-case complexity bounds of these methods. Second, in an effort to extend the idea of decomposition to constrained problems, there has been much interest in analyzing multi-block ADMM. However, cyclic multi-block ADMM was recently found to be possibly divergent. We prove that RP-ADMM (randomly permuted ADMM) converges in expectation for solving linear systems. As byproducts, we propose a new Bernoulli randomization scheme. By establishing a weaker version of matrix AM-GM inequality, we show that RP-CD is at least n times faster than cyclic CD for solving quadratic problems.

Improved Optimization of Finite Sums with Minibatch Stochastic Variance Reduced Proximal Iterations

|

|

Tong Zhang |

We present a novel minibatch stochastic optimization method for empirical risk minimization problems. The method efficiently leverages variance reduced first-order and sub-sampled higher-order information to accelerate the convergence. We prove improved iteration complexity over state-of-the-art methods under suitable conditions. Experiments are provided to demonstrate the empirical advantage of the proposed method over existing approaches in the literature.

Approximate Counting via Correlation Decay

|

Pinyan Lu |

In this talk, I will survey some recent development of approximate counting algorithms based on correlation decay technique. Unlike the previous major approximate counting approach based on sampling such as Markov Chain Monte Carlo (MCMC), correlation decay based approach can give deterministic fully polynomial-time approximation scheme (FPTAS) for a number of counting problems. The algorithms have applications in statistical physics, machine learning, stochastic optimization and so on.

Cybersecurity: Challenges and Strategies

|

Enhui Yang |

Information security is of paramount importance to our Internet-based economy. As more things get smarter and connected to the Internet, we as humans become increasingly vulnerable. Without solid security solutions in place, our properties and lives could be at risk. Although many security solutions are offered on the market, they all fail to a large extent, as evidenced by the fact that 90% of all large institutions had data breaches and/or were held for ransom over the past 12 months. In this talk, we will first analyze why existing security solutions fail. Then we will introduce a philosophically different approach to security — data security: make your data and cyber activities coexist with all looming threats and continue to function normally even within compromised networks and systems. Key technologies in this approach include, among other things, (1) encryption with post-quantum secure key management, (2) smart integration of mandatory access control and encryption (SIME), (3) behavior intelligence and machine learning, and (4) policing drivers. Together, they protect your data against data breaches and any known and unknown attacks and secure your entire data cyberspace. They have been implemented and tested in real-world environments. Finally, we will conclude the talk with a live demo.

Structured Sparse Representation with Deep Convolution Networks

|

Hongkai Xiong |

Deep convolution networks have significantly facilitated sparse representation of high-dimensional signals, especially image and video sequences, with large-scale data-driven invariants for deformation stability, but it lacks sound mathematical ground for statistics and optimality associated with the signal sparsity. Instead of cascaded non-linearities, invariant scattering convolution networks introduced scattering wavelets with high-order coefficients to guarantee nonlinear invariants like deformation stability and translational invariance. This talk presents our recent works to incorporate deep convolutional networks and structured sparsity for scalable and compact representation of high-dimensional signals with varying non-stationary statistics. Specifically, directional multi-resolution convolution network, namely RDnet, is proposed to improve approximation of multi-scale high-dimensional signals by cascading radial and directional filter banks in a flexible manner. It allows multiscale and multidirectional decomposition, where each layer can be interpreted with a band-pass response in the frequency domain with a guarantee of perfect reconstruction. RDnet can be improved with a tree-structured transform to search the optimal structure based on the Information-theoretic optimization. Furthermore, structured sparsity is adopted for multiscale high-dimensional signals to yield a compact representation based on its geometry, i.e. the grouped sparsity within its subspaces of basis and tree sparsity along multiple frequency bands. Progressive optimization is leveraged to support scalable representation under such sparsities. It is also promising to incorporate structured sparsity with deep convolution networks to fit the statistics under consistencies and associations derived from the non-linear invariants. For example, convolutional sparse coding can be considered to exploit the consistency and association within high-dimensional signals beyond the sparsity and redundancy in approximation and reconstruction of the signals.

Learning Partial Differential Equations for Computer Vision and Image Processing

|

Zhouchen Lin |

Many computer vision and image processing problems can be posed as solving partial differential equations (PDEs). However, designing PDE system usually requires high mathematical skills and good insight into the problems. In this paper, we consider designing PDEs for various problems arising in computer vision and image processing in a lazy manner: learning PDEs from training data via optimal control approach. We first propose a general intelligent PDE system which holds the basic translational and rotational invariance rule for most vision problems. By introducing a PDE-constrained optimal control framework, it is possible to use the training data resulting from multiple ways (ground truth, results from other methods, and manual results from humans) to learn PDEs for different computer vision tasks. The proposed optimal control based training framework aims at learning a PDE-based regressor to approximate the unknown (and usually nonlinear) mapping of different vision tasks. The experimental results show that the learnt PDEs can solve different vision problems reasonably well. In particular, we can obtain PDEs not only for problems that traditional PDEs work well but also for problems that PDE-based methods have never been tried before, due to the difficulty in describing those problems in a mathematical way.

Deep Visual Understanding

|

Tao Mei |

Since computer vision was founded in 1960s, researchers have been dreaming of enabling a machine to describe what it sees. Recent development of deep learning has significantly boosted computer vision research. It was said that computer vision now has almost a 5-year-old human visual system. This capability has great potential to enable the natural conversation between human and computer. In this talk, we will briefly introduce recent vision techniques for visual understanding, including fine-grained image and video recognition, and vision to language.

Randomized Iterative Methods and Complexity for Markov Decision Process

|

Mengdi Wang |

Consider Markov decision processes with S states and A actions per state, which sits at the heart of reinforcement learning and stochastic control. We provide the first set of nearly-linear and sublinear running time upper bounds and nearly matching lower bounds for the discounted Markov decision problem. For the upper bounds, we propose a randomized linear programming algorithm that takes advantage of the linear duality between value and policy functions. The method achieves nearly-linear run time for general problems, and sublinear run-time for ergodic decision process when the input is given in specific ways. To further improve the complexity, we propose a randomized value iteration method that leverages the contractive property of the Bellman operator and variance reduction techniques. For the lower bound, we show that any algorithm needs at least Omega(S^2 A) run time to get a constant-error approximation to the optimal policy. Surprisingly, this lower bound reduces to Omega(SA/epsilon) when the input is specified in suitable data structures. The upper and lower bounds suggest that the complexity of MDP depends on data structures of the input. These results provide new complexity benchmarks for solving stochastic dynamic programs and two-person stochastic dynamic games.

Inpatient Overflow: An Approximate Dynamic Programming Approach

|

Jim Dai |

Hospital inpatient beds are usually grouped into several wards, with each dedicated to a “primary” medical specialty. When a patient waits too long to get a primary bed, a hospital manager may assign her to a non-primary bed, which is often undesirable. Deciding when to use such “overflow” practice in real time decision making can be challenging. We develop an approximate dynamic programming (ADP) algorithm to aid the decision making. A key step is to choose appropriate basis functions. We use a novel combination of fluid control and single-pool approximation to devise such functions. We demonstrate, via extensive numerical experiments in realistic hospital settings, that our ADP algorithm is remarkably effective in finding good overflow policies. This is joint work with Pengyi Shi at Purdue University.

Robust Decision Making under Uncertainty for Safety Critical Applications

|

Mykel Kochenderfer |

A common mathematical model for sequential decision making under uncertainty is the partially observable Markov decision process (POMDP). Although this model has been well studied for at least half a century, POMDPs have not yet seen widespread use in engineering applications due to the challenges of computing optimal solutions. Recent advances in both algorithms and hardware have started to enable the application of POMDP formulations to the design of autonomous and semi-autonomous systems. This talk will discuss recent research using POMDPs for safety systems for aircraft and automobiles and will outline the challenges of validating such systems before they are deployed.

Maximizing Machine Learning Performance with Heterogeneous Computing Resources

|

Weifeng Zhang |

Machine learning has been widely used in our day-to-day life, from online shopping, city traffic monitoring, voice assistant, to personalized news recommendation. Vast amount of data, consumed by offline training and online inference, and increasingly complex machine learning models have imposed enormous challenges to the traditional computer architectures. Data centers are witnessing a rapid shift of computation paradigm from homogeneous CPU architectures to heterogeneous architectures including GPUs, FPGAs, and more recently application specific accelerators. In this talk, I will discuss some of these challenges, analyze the performance bottlenecks, and present a few of general approaches how to exploit application characteristics and computing heterogeneity to maximize machine learning performance.

Optical Circuit Switching by Default, Indirect Routing over Circuits for Adaptation

|

Bill Lin |

As Internet traffic continues to grow unabated at an exponential rate, driven in part by the increasing adoption of 4K UHD streaming, IoT devices, and cloud computing, it is unclear whether existing packet-routing network architectures based on electronic routers will continue to scale at the necessary pace. On the other hand, optical fiber and switching elements have demonstrated an abundance of capacity that appears to be unmatched by electronic routers. In this talk, I will present an optical networking architecture based on a paradigm of coarse optical circuit switching by default and adaptive indirect routing over circuits with spare capacity. In this architecture, long-duration quasi-static optical circuits are provisioned between edge routers at the boundary of the network to carry the traffic by default. When the provisioned optical circuit is inadequate, excess traffic is re-routed through circuits with spare capacity. In particular, by adaptively load balancing across circuits with spare capacity, excess traffic is routed to its final destination without the need to create circuits on the fly. I will describe provisioning algorithms based on the statistical analysis of historical traffic patterns and adaptive load-balancing algorithms that guarantee throughput optimality under the virtual network topology induced by the optical circuits.

Learning to Optimize: Training Deep Neural Networks for Wireless Resource Management

|

Mingyi Hong |

In this work, we propose a new learning-based approach for wireless resource management. The key idea is to treat the input and output of a resource allocation algorithm as an unknown non-linear mapping and to use a deep neural network (DNN) to approximate it. If the non-linear mapping can be learned accurately and effectively by a DNN of moderate size, then such DNN can be used for resource allocation in almost real time, since passing the input through a DNN to get the output only requires a small number of simple operations. In this work, we first discuss a few theoretical issue related to this approach. We characterize a class of ‘learnable algorithms’ and then design DNNs to approximate some algorithms of interest in wireless communications. Further, we rigorously characterize how the approximation error scale as a function of the size of DNN. Finally, we use extensive numerical simulations to demonstrate the superior ability of DNNs for approximating a state-of-the-art algorithm that is designed for power allocation in wireless transmit signal design, while giving orders of magnitude speedup in computational time.

Decentralized Signal and Information Processing over Networks

|

Qing Ling |

The deluge of networked big data calls for efficient signal and information processing algorithms over networks, subject to relatively limited computation and communication resources. Mathematically the signal and information processing task can be modeled as an optimization problem where the network nodes collaboratively minimize an aggregate cost function under network constraints. Decentralized optimization is a novel computation scheme to solve this problem, in which each node can only communicate with its neighbors during the optimization process. Such a computation scheme avoids a data fusion center or long-distance communication and offers better load balance to the network. This talk focuses on the decentralized consensus optimization problem and proposes a decentralized exact first-order algorithm (abbreviated as EXTRA). EXTRA has the best known convergence rates among the existing first-order decentralized algorithms. If the local cost functions are convex and have Lipschitz continuous gradients, EXTRA has an O(1/k) ergodic convergence rate in terms of the first-order optimality residual. If the aggregate cost function is also (restricted) strongly convex, EXTRA converges to an optimal solution at a linear rate.