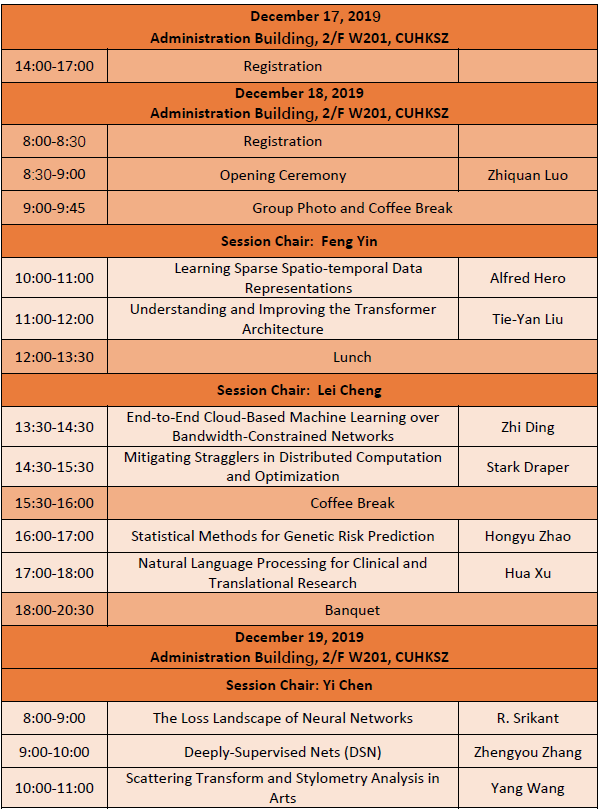

Technical Program

December 18th-19th full day: Invited talks at The Chinese University of Hong Kong, Shenzhen

December 20th morning: Invited talks at The Chinese University of Hong Kong, Shenzhen

Banquet will be arranged on December 18th night for all full registered members.

|

|

Transportaion to CUHKSZ on 2019/12/18 - 2019/12/20:

• Line 1: 7:30am set off from the Coli Hotel.

Invited talks

Learning Sparse Spatio-temporal Data Representations

|

Alfred Hero |

Learning from spatio-temporal data is one of the major outstanding problems in machine learning due to the paucity of spatio-temporal data models that are both expressive and parsimonious. Structured representations of spatio-temporal data have been proposed that use tensor factorizations of the covariance matrix. One representation that is used for Gaussian data is the Kronecker structured matrix normal model for the inverse covariance. In this talk we introduce an approach to ultra sparse Kronecker representation of spatio-temporal processes based on Cartesian product graphs and Sylvester equations.

Understanding and Improving the Transformer Architecture

|

Tie-Yan Liu |

The Transformer is a state-of-the-art deep learning model, especially for NLP tasks. In this talk, we will present a deep analysis on the architecture of Transformer, aiming at identifying problems with the current design and proposing new architectures with better performance. First, we find that the placement of layer normalization in the popular implementation of the Transformer is provably problematic. It makes the optimization unstable, and the training of this Transformer model cannot live without a slow warm-up stage (using a very small but increasing learning rate in the beginning). By correcting the placement, we can safely remove the warm-up stage and significantly speed up the training of the Transformer model. Second, we find that with the residual connections, the architecture of the Transformer can be viewed as a numeric solver of a specific ordinary differential equation (ODE) in a multi-particle dynamic system. By leveraging more effective numerical ODE solvers, we can obtain a new network architecture (called MacaronNet), which may potentially perform better. Extensive experiments show that MacaronNet indeed significantly outperforms the standard Transformer on many tasks. At last, we will discuss other aspects on which we can further improve the Transformer.

End-to-End Cloud-Based Machine Learning over Bandwidth-Constrained Networks

|

Zhi Ding |

JPEG2000 (j2k) is a highly popular format for image and video compression. With the rapidly growing applications of cloud based image classification, most existing j2k-compatible schemes would stream compressed color images from the source before reconstruction at the processing center as inputs to deep CNNs. We propose to remove the computationally costly reconstruction step by training a deep CNN image classifier using the CDF 9/7 Discrete Wavelet Transformed (DWT) coefficients directly extracted from j2k-compressed images. We demonstrate additional computation savings by utilizing shallower CNN to achieve classification of good accuracy in the DWT domain. Furthermore, we show that traditional augmentation transforms such as flipping/shifting are ineffective in the DWT domain and present different augmentation transformations to achieve more accurate classification without any additional cost. This way, faster and more accurate classification is possible for j2k encoded images without image reconstruction. Through experiments on CIFAR-10 and Tiny ImageNet data sets, we show that the performance of the proposed solution is consistent for image transmission over limited channel bandwidth.

Mitigating Stragglers in Distributed Computation and Optimization

|

Stark Draper |

The availability of big data and the use of these data in training artificial intelligence (AI) systems is changing the way companies do business in a wide swath of industries, from finance to entertainment to drug discovery. These advances rely on a computing, networking, and data storage infrastructure, the growing capabilities of which are key to realizing the promise of AI. However, as data sets and processing requirements scale up massively, classic single-processor computing paradigms cannot keep up. There has therefore been a renaissance in understanding how to bring large-scale parallelization to bear on algorithmic design for learning-specific workloads. In this talk we describe some themes of research that address how to parallelize tasks when the underlying computing fabric is non-ideal. In particular, we consider unpredictable delays in the completion of allocated tasks by individual worker nodes (often termed “stragglers”) and in the communication amongst the nodes. We discuss the wider literature and focus on a particular “anytime” minibatch approach to distributed optimization (ICLR’19) that we have developed in our group. Given time we will also mention some of our work in coded computing for large-scale matrix-matrix multiplication (ISIT’18, CWIT’19).

Statistical Methods for Genetic Risk Prediction

|

Hongyu Zhao |

Accurate prediction of disease risk based on genetic and other factors is an important goal in human genetics research and precision medicine. Well calibrated prediction models will lead to more effective disease screening, prevention and treatment strategies. Despite the identification of thousands of disease-associated genetic variants through genome-wide association studies (GWAS) in the past decade, accuracy of genetic risk prediction remains moderate for most diseases, which is largely due to the challenges in both identifying all the functionally relevant variants and accurately estimating their effect sizes. In this presentation, we will discuss a number of methods that have been developed in recent years to improve prediction accuracy from jointly estimating effect sizes, incorporating functional annotations, and leveraging genetic correlations among complex diseases. We will demonstrate the utilities of these methods through their applications to a number of complex diseases in large population cohorts, e.g. the UK Biobank data. This is joint work with Yiming Hu, Wei Jiang, Yixuan Ye, Qiongshi Lu, and others.

Natural Language Processing for Clinical and Translational Research

|

Hua Xu |

Over the past few decades, growing use of Electronic Health Records (EHRs) systems has established large practice-based clinical datasets, which are emerging as valuable resources for clinical and translational research. One of the major challenges of using EHR for clinical research is that much of detailed patient information is embedded in narrative reports. This presentation will describe our recent development of natural language processing (NLP) methods and software for extracting phenotypic information from clinical text in EHR, as well as how such NLP methods and tools can be used to support clinical research, such as drug outcome studies.

The Loss Landscape of Neural Networks

|

R. Srikant |

Neural networks have proved to be remarkably successful in many applications ranging from image classification to complex decision making. Underlying this success is the ability to train a neural network using data collected for each application. Such training involves the minimization of a loss function, which is a non-convex function with high-dimensional input. Multiple techniques are used to achieve a good network architecture after training; these include overparameterization, regularization, and the choice of the algorithm for performing the minimization. By focusing on the landscape of the loss function, we will present some examples to show why such training may be successful despite the nonconvexity of the optimization problem. Joint work with Shiyu Liang and Ruoyu Sun.

Deeply-Supervised Nets (DSN)

|

Zhengyou Zhang |

Our proposed deeply-supervised nets (DSN) method simultaneously minimizes classification error while making the learning process of hidden layers direct and transparent. We make an attempt to boost the classification performance by studying a new formulation in deep networks. Three aspects in convolutional neural networks (CNN) style architectures are being looked at: (1) transparency of the intermediate layers to the overall classification; (2) discriminativeness and robustness of learned features, especially in the early layers; (3) effectiveness in training due to the presence of the exploding and vanishing gradients. We introduce “companion objective” to the individual hidden layers, in addition to the overall objective at the output layer (a different strategy to layer-wise pre-training). We extend techniques from stochastic gradient methods to analyze our algorithm. The advantage of our method is evident and our experimental result on benchmark datasets shows significant performance gain over existing methods. This is a joint work with Zhuowen Tu, Chen-Yu Lee, Saining Xie, Patrick Gallagher at University of California, San Diego (UCSD).

Learning via Non-Convex Min-Max Games

|

Meisam Razaviyayn |

Recent applications that arise in machine learning have surged significant interest in solving min-max saddle point games. This problem has been extensively studied in the convex-concave regime for which a global equilibrium solution can be computed efficiently. In this talk, we study the problem in the non-convex regime and show that an ε–first order stationary point of the game can be computed when one of the player’s objective can be optimized to global optimality efficiently. We discuss the application of the proposed algorithm in defense agains adversarial attacks to neural networks, generative adversarial networks, fair learning, and generative adversarial imitation learning.

Multitask Regression and Flood Forecasting

|

Ami Wiesel |

In this talk, we will discuss recent advances in multitask regression and their application to flood forecasting. We will begin with a brief overview of Google’s flood forecasting initiative in India. The project involves time series prediction in different geographical locations based on a limited number of heavy tailed samples. To address these challenges, we will consider non-convex robust multitask models based on elliptical distributions. Next, we will present a spectral algorithm for learning shared features for multiple related tasks. Both works are accompanied by theoretical analysis and experiments with real world data.

Scattering Transform and Stylometry Analysis in Arts

|

Yang Wang |

With the rapid advancement in data analysis and machine learning, stylometry analysis in arts has gained considerable interest in recent years. A fundamental topic of research in stylometry is the detection of art forgery. But unlike many other machine learning applications, we typically face the challenge of not having enough data. In this talk I'll discuss how scattering transform can be applied to stylometry analysis, and demonstrate its effectiveness on Van Gogh paintings as well as another data set.

Speech Processing at Cocktail Party

|

Haizhou Li |

Humans have a remarkable ability to pay their auditory attention only to a sound source of interest, that we call selective listening, in a multi-talker environment or a Cocktail Party. However, signal processing approach to speech separation and/or speaker extraction from multi-talker speech remains a challenge for machines. In this talk, we study the deep learning solutions to monaural speech separation and speaker extraction that enable selective listening, speech recognition, speaker recognition at Cocktail Party. We discuss the computational auditory models, technical challenges and the recent advances in the field.

Optimization in Today's Telecom Technology Service- Applications and Challenges

|

Dong Zhang |

As 5G is rapidly developing, telecom carriers and service provides wish to improve their efficiency/service performance, to be more competitive in the market. Optimization is a key technology to achieve this goal. In this talk, I will first introduce several typical optimization scenarios in GTS, such as 5G station base station location selection, IP+Fiber network planning and optimization, on-site staff scheduling and so on. Second, I will briefly talk about our preliminary attempt to solve these problems. Finally, I will discuss some challenges recognized in these scenarios.

Deep Probabilistic Autoencoder and Its Applications

|

Bo Chen |

To build a flexible and interpretable model for data analysis, in this talk we introduce our recent work, deep probabilistic autoencoder, that uses a hierarchy of gamma distributions to construct its multi-stochastic-layer generative network. In order to provide scalable posterior inference for the parameters of the generative network and efficiently infer the local latent representations via the inference network, we develop a scalable hybrid Bayesian inference method consisting of layer-adaptive stochastic gradient Riemannian MCMC and a Weibull upward-downward variational encoder. Based on the fundamental fully-connected deep model, we show its deep dynamic and convolutional variants on different tasks, such as supervised modeling, image-text joint modeling and radar HRRP target recognition.

Fog-networks and its Technologies

|

Jiangzhou Wang |

Due to the demand for rapid traffic growth, cloud radio access network is being evolved to fog networks. This talk will introduce fog-networks and present its key technologies, including machine learning and mobile edge computing technologies. Recent research results in fog networks will be presented.

Machine Learning for Wireless Communications

|

Mérouane DEBBAH |

Mobile cellular networks are becoming increasingly complex to manage while classical deployment/optimization techniques are cost-ineffective and thus seen as stopgaps. This is all the more difficult considering the extreme constraints of beyond 5G networks in terms of data rate (around 1Tb/s), massive connectivity (1O/m2), latency (under 1ms) and energy efficiency. Unfortunately, the development of adequate solutions is severely limited by the scarcity of the actual resources (energy, bandwidth and space). Recently, the community has turned to a new resource known as Artificial Intelligence at all layers of the network to exploit the increasing computing power afforded by the improvement in Moore's law in combination with the availability of huge data. This is an important paradigm shift which considers the increasing data flood/huge number of nodes as an opportunity rather than a curse. In this tutorial, we will discuss through various examples the on-going AI architecturesand Algorithms for the design of Next Generation Intelligent Networks.

Medical Image Analysis and Surgical Simulation – AI and VR Applications for Medicine

|

Pheng Ann Heng |

There are many successfully applications of deep learning in solving challenging and difficult problems in recent years. An excellent example is its application in medical image analysis. Our group is the first to employ 3D convolutional neural networks for automatic detection of cerebral micro-bleeds from MR images. We also proposed multi-level contextual 3D convolutional neural network framework for false positive reduction in automated pulmonary nodule detection. We further proposed a novel and efficient 3D CNN equipped with a 3D deep supervision mechanism to comprehensively address the challenges of optimization difficulties of 3D networks and inadequacy of medical training samples. Our successful deep learning applications cover a wide spectrum of medical image modalities, include histopathological imaging, ultrasound imaging, MR/CT imaging, dermoscopy imaging colonoscopy videos and surgical videos. Concurrently, there are also many significant and promising developments in virtual reality that are applicable for medical applications. Virtual reality based surgical simulation can provide a cost-effective and efficient way to train novices. In order to achieve the goal of delivering specialized training of a surgical procedure, one practical solution is to construct a realistic virtual environment through intelligent integration of medical imaging, motion tracking, physically based simulation, haptic feedback and visual rendering. In this talk, I shall present our recent works in using deep learning for medical image analysis and introduce some VR-based surgical simulators we have developed.

Parametric Fokker-Planck Equations

|

Hongyuan Zha |

Fokker-Planck equation describes the temporal evolution of the marginal probability law of a SDE. In this talk, we derive the Fokker-Planck equation on a parametrized statistical manifold based on the Wasserstein gradient flow of relative entropy. We then pull back the PDE to a finite dimensional ODE on the parameter space. We use normalizing flow to derive a numerical algorithm for solving the parametric Fokker-Planck equation. Some numerical examples in higher dimensional space are provided. Error analysis and convergence analysis are also established. We additionally make connections to GAN and information-geometric optimization.