Research Projects

Brain-inspired auditory attention

Project description/goals

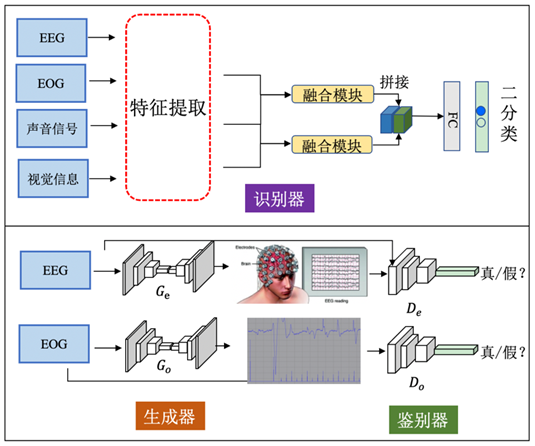

We formulate a computational model that emulates the human cognitive process at a "cocktail party", with the aim of achieving selective auditory attention in real-world acoustic environments. We will advance the state of the art in both theory and practice, and develop prototypes for video conferencing and hearing aid applications.

Importance/impact, challenges/pain points

State-of-the-art speech technologies, such as speech recognition, speaker recognition and computer-assisted speech communication, work reliably only in quiet, single-talker acoustic environments. The success of this project would mark an important milestone for deploying speech technologies in complex real-life acoustic environments, such as a noisy, multi-talker cocktail party.

Solution description

- A system that extracts the voice of the attended talker from a noisy, multi-talker environment, guided by the listener's eye gaze or brain signals.

Key contribution/commercial implication

- The world's first hearing aid with eye- or brain-steered auditory attention.
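The steering step of the solution above can be illustrated with a minimal sketch. It assumes that a separation front end (not shown) has already split the mixture into one stream per talker, so "extraction" reduces to choosing which stream to pass to the listener. For brain signals, the sketch uses a common baseline from the auditory attention decoding literature: compare a speech envelope decoded from EEG against each talker's envelope and pick the best Pearson correlation. For eye gaze, it picks the talker whose azimuth is closest to the gaze direction. The function names (`select_by_eeg`, `select_by_gaze`), the azimuth convention and the toy values are hypothetical illustrations, not the project's actual method or data, and the EEG decoder itself is assumed rather than implemented.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length signals."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("signals must have equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0  # a flat signal carries no envelope information
    return cov / math.sqrt(vx * vy)


def select_by_eeg(decoded_envelope: Sequence[float],
                  speaker_envelopes: Sequence[Sequence[float]]) -> int:
    """Hypothetical brain-steered selection: index of the talker whose
    envelope correlates best with the envelope decoded from EEG
    (the EEG-to-envelope decoder is assumed, not shown)."""
    scores = [pearson(decoded_envelope, env) for env in speaker_envelopes]
    return max(range(len(scores)), key=scores.__getitem__)


def angular_distance(a_deg: float, b_deg: float) -> float:
    """Smallest absolute difference between two azimuths, in degrees."""
    d = (a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def select_by_gaze(gaze_azimuth_deg: float,
                   speaker_azimuths_deg: Sequence[float]) -> int:
    """Hypothetical gaze-steered selection: index of the talker closest
    to the listener's gaze direction (azimuths assumed known)."""
    return min(range(len(speaker_azimuths_deg)),
               key=lambda i: angular_distance(gaze_azimuth_deg,
                                              speaker_azimuths_deg[i]))


if __name__ == "__main__":
    # Toy envelopes for two talkers (illustrative values, not real data).
    talker_a = [0.1, 0.8, 0.3, 0.9, 0.2, 0.7]
    talker_b = [0.9, 0.1, 0.8, 0.2, 0.9, 0.1]
    # Pretend the EEG decoder produced a noisy copy of talker A's envelope.
    decoded = [0.2, 0.7, 0.35, 0.8, 0.25, 0.6]
    print(select_by_eeg(decoded, [talker_a, talker_b]))  # 0
    # Listener looks 20 degrees to the left; talkers at -30 and +45 degrees.
    print(select_by_gaze(-20.0, [-30.0, 45.0]))  # 0
```

In a real system the correlation would be computed over sliding windows of several seconds, since short windows make EEG-based decisions noisy; the window length is a design trade-off between decision speed and accuracy.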

Next steps

Large-scale data collection in realistic acoustic environments

Collaborators/partners

University of Bremen (Germany), National University of Singapore (NUS)

Team/contributors

Haizhou Li, Siqi Cai, Meng Ge